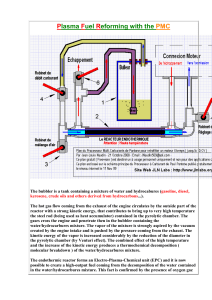

The Spectrum of Safety-Related Rule Violations: Development of a Rule-Related Behavior Typology

Sebastian Brandhorst and Annette Kluge, Ruhr University Bochum, Bochum, Germany

In a production context, safety-related rule violations are often associated with rule breaking and unsafe behavior even if violations are not necessarily malevolent. Nevertheless, our past experiments indicated a range of strategies between violation and rule compliance. Based on this finding, different types of rule-related behavior for managing organizations’ goal conflict between safety and productivity are assumed. To deepen the understanding of rule violations, the behavior of 152 participants in a business simulation was analyzed. Participants operated a plant as a production worker for 36 simulated weeks. In each week, they could choose to comply with safety rules or to violate them in order to maximize their salary. A cluster analysis of the 5,472 decisions made on how to operate the plant included the severity of the violations, the number of times participants changed their rule-related strategy, and the extent of failure/success of these strategies. Five clusters of rule-related behavior were extracted: the compliant but ineffective “executor” (15%), the production-optimizing and behaviorally variable “optimizer” (13%), the successful and compliant “well behaved” (36%), the notoriously violating “inconvincible” (29%), and the “experimenter” (7%), who does not succeed with various violating strategies.

Keywords: goal conflict, strategy change, failure, unsafe acts, person approach

Address correspondence to Sebastian Brandhorst, Industrial, Organisational and Business Psychology, Department of Psychology, Ruhr University Bochum, Universitätsstr. 150, 44801 Bochum, Germany, sebastian.brandhorst@rub.de.
Journal of Cognitive Engineering and Decision Making 2016, Volume 10, Number 2, June 2016, pp. 178–196. DOI: 10.1177/1555343416652745. Copyright © 2016, Human Factors and Ergonomics Society.

Introduction

We’d like people to remember that Sheri was 23 years old, the day she went to work at UCLA [University of California, Los Angeles], for the last time. That she was a young girl, living her life to the fullest. . . . She really, really wanted to make a difference in the world. She really wanted to change it. (U.S. Chemical Safety Board [CSB], 2011)

The tragic case of Sheri Sangji, a research assistant at UCLA in 2008 who died due to serious burns 18 days after a laboratory accident, is one of many examples of the consequences of human error for which she was not responsible. In this case, Sangji’s supervisor violated basic rules for lab safety, which led to the young woman’s death (Morris, 2012). On a larger scale, the Piper Alpha catastrophe in 1988 culminated in US$3.4 billion of property damage, massive maritime pollution, and 167 fatalities (Taimin, 2011). This incident, along with an estimated 70% of all accidents in the production industry, was rooted in rule violations (Mason, 1997). In this paper we aim to gain some insight into the different phenotypes of violating behavior. Even with the outcome of tragic events in mind, there seem to be some potential benefits of rule violations, for example, detecting potentially harmful rules or regulations. By identifying different types of rule-related behavior, there are some opportunities to prevent unintended events but also to meet employees’ demands and to apply their abilities where they are needed.
Unsafe Acts: Violation and Optimization

Previous research in human factors (e.g., Reason, 2008), as well as our own research (Kluge, Badura, & Rietz, 2013), has shown that improving safety in organizations requires an investigation of the interplay between organizational and person-related factors affecting rule violations. For this reason, the following paragraph will define the term violation to enable a consistent understanding. One of the most cited and accepted concepts regarding unsafe acts is Reason’s (1990) human error. Human errors are divided into unintended and intended actions, whereby slips and lapses are the manifestations of unintended actions that deviate from a given rule. Even when a mistake arises from intended actions, it is still a “classic” error. This type of error emerges from the misapplication of a good rule or the application of a bad rule. Nonetheless, following the rule was intended. The rule-related behavior of interest in this case, the safety-related rule violation (henceforth shortened to “violation”), is characterized as a deliberate but nonmalevolent deviation from safety rules and regulations (Reason, 2008). To distinguish between errors and violations, violations must fulfill two conditions: The violated rule was known and the act of violating was intended (Whittingham, 2004). Although absent in theoretical considerations, a tendency to optimize the outcome by adjusting the standards is reported within the literature dealing with catastrophes or disasters, such as the explosion of the Challenger space shuttle in 1986 (Starbuck & Milliken, 1988). The authors sum up that the more fine-tuning (this term was coined by Starbuck and Milliken and means a rising severity of violating safety standards) that occurs, the more likely something fatal will arise outside the boundaries of safety.
These results are in line with Reason’s (2008) Swiss cheese model and Verschuur’s operating envelope (Hudson, Verschuur, Parker, Lawton, & van der Graaf, 2000). Reason’s Swiss cheese model describes unsafe acts as holes in defense layers, which depict different organizational levels that should prevent unintended events or outcomes. The operating envelope defines acts that are safe in the center of safety boundaries. With increasing quantity or extent of unsafe acts, they move away from the safe center to the critical boundaries. In both models, when using the term fine-tuning, this behavior would increase the holes in the defense layers or move a system to the boundaries of safety and amplify the likelihood of accidents and disasters.

Research Approaches to Violations

Authors of previous research covering topics relating to rule-related violations have considered the intention, situational factors, and the frequency of violations (Reason, 1990). Later models also take into account a range of factors, such as organizational or work system factors (Alper & Karsh, 2009). However, so far, researchers have not considered the qualities of violations, meaning whether all parts of the rule have been violated, only a small part, or a particular section. For such a consideration, the fusion of system and person approaches (Reason, 2000) is beneficial but brings with it some potentially negative social connotations that might inhibit an application. Therefore, the content-based boundaries of these two approaches will be briefly outlined and discussed. Within the system approach to violations, the focus is on the organizational preconditions rather than on human behavior.
Although a system approach proclaims to take the focus away from blaming or scapegoating someone, and instead moves toward reaching an understanding of an accident’s occurrence (Whittingham, 2004), it should not come to be seen as sacrilegious to analyze an employee’s actions in detail, which is a person approach. For a holistic view on rule-related behavior, research could benefit from incorporating the person approach, especially in the case of violations. This type of human error is, by definition, accompanied by intention. Another reason not to abandon the person approach lies in the potential beneficial value of violations, described by Besnard and Greathead (2003). The authors extend the term violation with the aspect of cognitive flexibility of the violating person who handles faulty procedures by adapting his or her actions to organizational requirements that cannot be met with (at least part of) the given orders. But investigating adaptive behavior presupposes a person approach.

The utility of merging the system and person approaches to safety-related rule violations is demonstrated by the behavioral adaptation to changed circumstances caused by the treatment of detected rule violations found in previous work by our research group (von der Heyde, Brandhorst, & Kluge, 2013): When the number of rule violations detected by the system declined, the hidden tendency toward the so-called soft violation increased. This violating but undetectable behavior bears the potential to evolve from the level of an almost-accident to a severe catastrophe by bringing the operator or system to the edge (Hudson et al., 2000), but it can also shed some light on faulty procedures (Besnard & Greathead, 2003).

The Study’s Purpose

We coined the term soft violation due to our observation of behavior that does not fit into the terms compliance and violation (von der Heyde et al., 2013).
The dichotomous perspective on rule-related behavior (compliance or violation) does not cover the fine-tuning character implicitly depicted by the models of Reason (2008) and Verschuur (Hudson et al., 2000) and explicitly described by Starbuck and Milliken (1988). Further efforts need to be undertaken to develop a conceptual framework to deduce other possible strategies of noncompliance or optimization, respectively. The novel idea in the present paper is to enrich the system approach and find an action-oriented description of rule-related strategies. The potential strategies for this person approach will be identified first. We assume a range of strategies that differ in their quality of rule-violating behavior. The aim is to conduct a cluster analysis to identify different types of rule-related behaviors, which differ on three dimensions described in the following paragraphs.

Dimensions of Rule-Related Behavior

Most theories concerning rule-related behavior have at least one characteristic in common: The operator’s action is treated as an isolated and single event (although the idea of routine work is mentioned in some theories; Reason, 1997), which merely deductively includes a repeated execution of a task. Desai (2010) also implied that routine work is repeated work with different options of behavioral patterns, but an explicit consideration seems to be lacking. In this section, a set of factors will be considered that embody the person and system approaches to operators’ behavior within their routine and therefore repeating work task, with the aim of deriving specific rule-related behaviors. The first factor contains the results obtained from analyzing the rule-related behavior in a repeated complex task (further described in the Method section). A view on the operator’s actions enables the calculation of a value that describes an overall magnitude of committed violations.
Action-related theories, such as the Rubicon model of action phases (Heckhausen & Leppmann, 1991) and the action regulation model (Frese & Zapf, 1994), incorporate the assessment of an action’s outcome by the acting person. Reason (2000) mentioned with respect to an observed behavior that it can be stated as rewarding or unrewarding. This second aspect will be taken into account to describe the operator’s rule-related behavior in the sense of achieving success by implementing a certain behavioral strategy. Different behavioral strategies are linked to different outcomes. If the expected outcome is achieved, the behavior (in the sense of the chosen strategy) is assumed to be perceived as rewarding; otherwise, it will be experienced as unrewarding, which within this paper will be termed failure. With this factor, a system-oriented value is considered, meaning that the outcome of the operator’s action is taken into account. Within his efficiency–thoroughness trade-off (ETTO) principle, Hollnagel (2009) discusses failure as a possible result of a trade-off between efficiency and thoroughness. He argues for a focus on different types of performance variability rather than an outcome-oriented perspective. This unidimensional (efficiency vs. thoroughness) and descriptive (performance variability) approach can be augmented with explanatory potential by means of a multidimensional characterization of rule-related behavior. From our perspective, the performance variability is depicted by a range of strategies that differ in their extent of rule compliance/violation and by an outcome assessment as success or failure.

The third dimension taken into account is the behavioral adjustment. With regard to the aspect of action regulation, the assessment of the executed strategy provides feedback, which will then be considered for the subsequent goal development and plan generation (Frese & Zapf, 1994).
The model of planned behavior also relates to some past behavior (Ajzen, 1991). In line with the aforementioned action- or behavior-related theories, a behavioral adaptation by the operator within a routine work scenario is assumed, which will be described as the extent of a behavior shift. A change in the choice and application of a particular behavioral strategy is assumed to be possibly provoked by the feedback perceived from a certain outcome that is assessed as a success or failure. However, this conclusion should not lead to the possible fallacy that a high rate of failure prevents the strategy concerned from being maintained or that it will automatically lead to a behavior shift. For this conceptual assumption, the behavior shift is the third factor considered in describing the typology of operators’ behavior. These three factors (violation score, failure, and behavior shift) are considered for a subsequent cluster analysis in order to identify different types of rule-related behavior.

Method

Participants in the present study consisted of 152 students (38 female) of engineering science from the University of Duisburg-Essen with a mean age of 21.32 years (SD = 2.39). They were recruited by flyers and before lectures from January to July 2013 and were compensated with €50. The study was approved by the local ethics committee. To investigate safety-related rule violations, our research group applied a simulated production plant (WaTrSim; Figure 1). This simulation depicts a wastewater treatment plant that was developed in collaboration with experts in automation engineering at the Technical University of Dresden, Germany, in order to achieve a realistic setting depicting a process control task with high face validity (Kluge, Badura, Urbas, & Burkolter, 2010). The simulation was implemented to control an actual functioning plant model.
Participants are in the role of a control room operator and start up the plant for segregating delivered industrial wastewater into its components (solvent and water) to maximize the production outcome and to minimize failure in segregation. There are two kinds of crucial plant sections the participants adjust: the valves and the tanks. Operators have to adjust the flow rate of the valves determining the speed of filling and clearance of the tanks. Additionally, the capacity of the tanks must be considered. The simulation control phase in this study contains 58 stages that are split into 10 training stages and 48 production stages. These stages depict a whole production year, with 12 stages each quarter. Each stage takes 3 min. For the complex task of starting up the plant, two procedures are at hand to lead the plant into a balanced state of production. The first is a shorter and faster, and therefore more productive, one (in terms of output) and contains eight steps (high-risk procedure). However, the disadvantage of using this high-risk procedure is that the plant enters a critical system state, which puts the plant in a vulnerable condition with an increased probability of a deflagration. Despite the critical system state, the plant operates and segregates wastewater as it would in a safe state, but there is a potential loss of components and production due to a deflagration. To guarantee the comparability of circumstances, the deflagration probability is preprogrammed. If participants violate the safety-related rules during a production week with a predefined deflagration possibility, they are informed about the damage that occurred, which makes the plant (and therefore the interface) inoperative in the respective week, with a loss of all production outcome and salary. Alternatively, there is a lower-risk procedure, which consists of the high-risk procedure extended by three additional steps.
Due to these extra steps, more time is required to start up the plant, and a lower production outcome (approximately 1,100 liters) is generated compared with the high-risk procedure (approximately 1,300 liters). The advantage of this lower-risk procedure is that it avoids a critical system state. Consequently, the participants are placed in a goal conflict between safety (rule compliance) and productivity (rule violation).

Figure 1. The WaTrSim interface with its components (valves, tanks, and heatings) and performance gauges.

Procedure

To prevent social desirability, the participants were given the cover story that the study was aimed at evaluating the effectiveness of complex task training. They were told that they could discontinue at any time (in terms of informed consent) and that the payment given for the 5-hr study would depend on their performance and could reach “up to” €50. Due to ethical considerations, all participants were actually paid €50 after the participation, irrespective of their production outcome. Participants had to complete a knowledge test regarding wastewater treatments (self-developed), a general mechanical-technical knowledge test (self-developed), and a general mental ability (GMA) test (Wonderlic, 2002). They were introduced to WaTrSim (Figure 1) and received training for the high-risk procedure, which was completed by a self-conducted test to check their ability to start up the plant. The production phase started with the first 12 production stages. After this first quarter, the participants were informed that an accident had occurred at another part of the plant and that a new and safe procedure would be mandatory from now on. The hazardous but more productive high-risk procedure was now forbidden, and the safe, lower-risk procedure was introduced and declared as mandatory. From this point on, conducting the high-risk procedure was regarded as a rule violation.
Participants were then trained and tested in conducting the safe, lower-risk procedure, with an emphasis on the safety-related substeps leading to a safe startup procedure to ensure that they were aware of the differences between the two procedures. They handled the plant for the next three quarters, and after the production phase was completed, further personality questionnaires were administered regarding the participants’ presence (Frank & Kluge, 2014), cautiousness (Marcus, 2006), self-interest (Mohiyeddini & Montada, 2004), and sensitivity toward injustice (Schmitt, Maes, & Schmal, 2004). Finally, the participants were debriefed and paid.

Content-Related Differences in the Startup Procedures

Both procedures, the high-risk procedure and the lower-risk procedure, are divided into two parts. The first part of each is crucial for causing or preventing the critical system state (upper-left and upper-right light gray squares in Table 1) and is depicted by way of example in Figure 2. The safety-related substeps of the lower-risk procedure, which prevents accidents, are depicted as an example. The adjustment of valves V1 and V2 is joined (follow-up control), meaning that a regulation would affect both. A simultaneous opening up would mix different concentrations of solvent and would provoke the critical system state in terms of a potentially explosive fluid. To prevent this critical system state, the valves’ follow-up must be disabled (Figure 2a) in order to adjust the valves in succession (Figures 2b and 2d) under consideration of specific levels of the mixing repository R1 (Figures 2c and 2e). In this way, the solvents’ concentration will be balanced. Nevertheless, the process of mixing the different concentrations of solvent remains a critical phase within the system and procedure.

Table 1: Combination of Possible Ways of Execution and the Resulting Strategies
Columns, lower-risk procedure: Compliance, Soft Violation, Defiant Compliance, Scrape Violation.
Columns, high-risk procedure: Classic Violation, Optimizing Violation, Order Reactance, Straight Contravention.
Rows, safety-related substeps:
(1) Disable follow-up control
(2) Valve V1: flow rate 500 liters per hour
(3) Wait until capacity of repository R1 > 200 liters
(4) Valve V2: flow rate 500 liters per hour
(5) Wait until capacity of repository R1 > 400 liters
Rows, non-safety-related substeps:
(6) Valve V3: flow rate 1,000 liters per hour
(7) Wait until capacity of heating bucket HB1 > 100 liters
(8) Adjust heating bucket HB1
(9) Wait until heating bucket HB1 > 60°C
(10) Activate counterflow distillation fractionating column (Kolonne) K1
(11) Valve V4: flow rate 1,000 liters per hour
Note. Checks represent correctly conducted substeps (all steps of each rectangle must be conducted); crosses represent violated substeps (merely one substep must be violated in each rectangle); dashes represent nonappendant substeps.
[Cell marks (checks, crosses, dashes) not recoverable from this copy.]

Figure 2. Depiction of safety-critical substeps by conducting the first part of lower-risk procedure. (a) Disable follow-up control. (b) Valve V1: flow rate 500 liters per hour. (c) Wait until repository R1 > 200 liters. (d) Valve V2: flow rate 500 liters per hour. (e) Wait until repository R1 > 400 liters.
If some substeps of the first part of the lower-risk procedure (Lower-Risk Procedure 1) are not correctly conducted (e.g., the operator did not wait until the tank R1 contains at least 200 liters), a critical system state results and there is a risk of a deflagration. A soft violation, as described earlier, would mean that although the safety-critical steps are conducted as well as the non-safety-related substeps, the latter are not implemented in the prescribed manner. For a soft violation, the flow rates of the downstream valves (V3 and V4) may be adjusted to be higher than demanded in order to raise the return from the distillation and therefore the production output. The straight contravention, as another example, would mean leaving out the three substeps (rule violation), setting V1 to, for example, 700 liters per hour (procedure violation of safety-related segment) and opening V3 at 1,400 liters per hour (procedure violation of non-safety-related substeps) before tank R1 reaches the capacity of 400 liters. This action would result in an earlier production start and a more effective return from the distillation. As the second part of both startup procedures (High-Risk Procedure 2 and Lower-Risk Procedure 2) does not contain any safety-related substeps, these parts are equal (bottom dark gray rectangle in Table 1). In summary, the following two aspects are relevant for the consideration of the two available procedures:
1. The first part of both procedures is safety related and is differentiated by the startup procedures.
2. The second part of each procedure is not safety related and is equivalent.
From the perspective of the person approach, there are three decisions to consider: (a) Which procedure is chosen? (b) Are safety-related substeps affected? (c) Are non-safety-related substeps affected? These decisions are made in a trade-off space between risk and reward.
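As an illustration of decision (b), the safety-related substeps could be checked against a sequence of logged plant states roughly as follows. This is a minimal sketch under stated assumptions: the `Snapshot` layout and the function name are hypothetical and do not reproduce the WaTrSim log format, and the reading that V3 may only be opened once R1 exceeds 400 liters is inferred from the straight-contravention example above.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Snapshot:
    """One logged plant state (hypothetical, simplified layout)."""
    followup_enabled: bool  # follow-up control coupling V1 and V2
    v1: float               # flow rate of valve V1, liters per hour
    v2: float               # flow rate of valve V2, liters per hour
    v3: float               # flow rate of valve V3, liters per hour
    r1: float               # level of repository R1, liters


def safety_substeps_ok(log: Sequence[Snapshot]) -> bool:
    """Return True if no snapshot contradicts the safety-related substeps."""
    prev: Optional[Snapshot] = None
    for s in log:
        # V1/V2 must not be open while follow-up control still couples them.
        if s.followup_enabled and (s.v1 > 0 or s.v2 > 0):
            return False
        # The prescribed flow rate for V1 and V2 is 500 liters per hour.
        if s.v1 not in (0, 500) or s.v2 not in (0, 500):
            return False
        # Detect the moment a valve changes from closed to open.
        v2_opened = s.v2 > 0 and (prev is None or prev.v2 == 0)
        v3_opened = s.v3 > 0 and (prev is None or prev.v3 == 0)
        if v2_opened and s.r1 <= 200:
            return False  # V2 opened before R1 exceeded 200 liters
        if v3_opened and s.r1 <= 400:
            return False  # assumed: V3 opened before R1 exceeded 400 liters
        prev = s
    return True
```

A startup that sets V1 to 700 liters per hour, or that opens V3 while R1 holds only 300 liters, would be flagged by this check, whereas an over-high V3 flow rate alone would not, because that concerns the non-safety-related substeps.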
The high-risk procedure is forbidden from a certain point onward during the experiment. A decision to carry out this (forbidden) procedure is a rule violation (risk) to gain higher output and payment (reward) and is made by leaving out the three additional safety steps to start up the plant faster. Additionally, however, and these are the second and third decisions, the operator can decide to violate the substeps within a procedure as well. When the safety-related substeps are violated, the plant enters a critical system state (risk) but can produce sooner and more (reward). Violating the non-safety-related substeps involves no threat to the system’s stability but allows some outcome optimization (reward). As shown in Table 1, there are, for example, certain flow rates to adjust (valves) and capacities (tanks) to keep in mind. Violations are also possible if the operator does not stick to these standards. At this point, the following basic consideration is crucial: Even if the rule is followed by conducting the lower-risk procedure (in terms of conducting 11 steps), a violation can still be present if the standards of the substeps are violated. In Table 1, the compliance or violation of rules and substeps is illustrated by checks or crosses. For a clear conceptual differentiation, we consider two kinds of compliance or violation, respectively: rule violations, which refer to the choice between conducting the high-risk procedure or the lower-risk procedure, and procedure violations, meaning the decision whether or not to comply with the given standards of each substep within each procedure. As shown in Table 1, the combination results in eight different strategies. The first four strategies (Table 1, vertical tags, left to right) do not constitute any rule violations and constitute only procedure violations (except for the compliance strategy, which is, by definition, a nonviolation).
These procedure violations can be placed in the safety-related segment of the procedure, in the non-safety-related segment, or in both to speed up the startup process. To be highly productive, the rule must be violated. When some procedural violations are added on top, the productivity is raised to its maximum. These combinations of procedural and/or rule-related violations imply an order in terms of being distinct with regard to their quality (extent) of violation. They range from total compliance (both rule compliance and procedure compliance) to total violation (both rule violation and procedure violation, named here as “straight contravention”).

The Machine-Readable Criteria

For further investigation, every decision for one of the eight strategies of every operator in every week must be identified within the log files provided by WaTrSim. The framework depicted in Table 1 allows the deduction of criteria that can be formulated in a machine-readable way. In total numbers, there are 152 operators (participants) who each decide 36 times per simulated production year. Accordingly, 5,472 decisions were made. Considering that every startup consists of 180 states of each of the 11 relevant plant sections, 10,834,560 pieces of data (5,472 × 180 × 11) needed to be taken into account for a proper characterization. A data set of this size increases the likelihood of errors (mistakes or lapses) if the analysis and assignment were to be done by hand. Therefore, the following four rules were syntactically implemented, each generating a true or false statement.

Operationalized Dimensions of Rule-Related Behavior

To address the second aspect of the present research, identifying different types of rule-related behavior, the set of derived variables (violation score, failure, and behavior shift) will be operationalized in the following paragraphs.
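To illustrate how true/false statements of this kind can be combined into one of the eight strategies, the following Python sketch maps three Boolean flags onto the strategy order from total compliance to straight contravention. It is not the authors' implementation: the flag names and the function are hypothetical, and the assignment of the intermediate strategies (defiant compliance and order reactance as violations of the safety-related segment, soft and optimizing violation as violations of the non-safety-related segment) is an assumption based on the description of the soft violation and the order of the strategies in Table 1.

```python
# Strategy names in assumed order of increasing extent of violation.
STRATEGIES = (
    "compliance",             # lower-risk procedure, all substeps as prescribed
    "soft violation",         # lower-risk, non-safety-related substeps violated
    "defiant compliance",     # lower-risk, safety-related substeps violated (assumed)
    "scrape violation",       # lower-risk, both segments violated
    "classic violation",      # high-risk procedure, substeps as prescribed
    "optimizing violation",   # high-risk, non-safety-related substeps violated (assumed)
    "order reactance",        # high-risk, safety-related substeps violated (assumed)
    "straight contravention"  # high-risk, both segments violated
)


def strategy_code(high_risk_procedure: bool,
                  safety_substeps_violated: bool,
                  nonsafety_substeps_violated: bool) -> int:
    """Combine the three decisions into a code from 0 to 7."""
    return (4 * int(high_risk_procedure)
            + 2 * int(safety_substeps_violated)
            + 1 * int(nonsafety_substeps_violated))


def strategy_name(code: int) -> str:
    """Look up the strategy label for a code from 0 to 7."""
    return STRATEGIES[code]
```

For example, `strategy_name(strategy_code(False, False, True))` yields "soft violation", and a high-risk startup with violations in both segments yields code 7, the straight contravention.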
Based on the eight qualitatively different types of violation worked out earlier, the applied strategies of each participant during each production week are coded from 0 to 7, corresponding to the increasing extent of violation. Thus, compliance is coded 0 and straight contravention is coded 7. For the violation score, all 36 decisions of the participants are added together; therefore, the score can range from 0 to 252. A high violation score describes an increased tendency of rule-violating behavior. The acceptance of unsafe and risky actions brings with it more violations and fine-tunings, both qualitatively and quantitatively. By contrast, a low violation score stands for cautiousness and rule-compliant behavior.

As no information was available on the participants’ perception and outcome evaluation, an artificial criterion was used to decide whether or not a conducted strategy was successful. The procedures (high-risk and lower-risk procedures) are linked to a predefined production outcome, to which the participants receive feedback during the production phase. There are several numerical and graphical depictions of the current and accumulated production outcome in the user interface (Figure 1). By conducting the lower-risk procedure, an output of 1,100 liters can be expected, whereas applying the high-risk procedure leads to 1,300 liters. These two benchmarks are used to determine whether a chosen procedure was a success or a failure. The strategies coded from 0 to 3 (associated with the lower-risk procedure; Table 1) are expected to reach an output of 1,100 liters, and the strategies coded from 4 to 7 (associated with the high-risk procedure; Table 1) should enable the 1,300-liter benchmark to be reached. If this benchmark is not achieved, the respective week is noted as a failure. Similar to the violation score, the number of failures is counted and can therefore range from 0 to 36.
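The violation score and the failure count described above can be sketched in a few lines of Python. The function names and the list-based input (one strategy code and one production output in liters per week) are hypothetical; treating a week as a failure when the output is strictly below the benchmark is our reading of "not achieved".

```python
from typing import Sequence

LOWER_RISK_BENCHMARK = 1100  # liters, expected for strategies coded 0 to 3
HIGH_RISK_BENCHMARK = 1300   # liters, expected for strategies coded 4 to 7


def violation_score(codes: Sequence[int]) -> int:
    """Sum of the weekly strategy codes (0 to 252 for 36 weeks)."""
    return sum(codes)


def benchmark_for(code: int) -> int:
    """Output benchmark linked to the procedure behind a strategy code."""
    return LOWER_RISK_BENCHMARK if code <= 3 else HIGH_RISK_BENCHMARK


def failure_count(codes: Sequence[int], outputs: Sequence[float]) -> int:
    """Number of weeks in which the output stayed below the benchmark."""
    return sum(1 for code, out in zip(codes, outputs)
               if out < benchmark_for(code))
```

With the illustrative codes `[0, 0, 4, 7]` and outputs `[1100, 1050, 1350, 0]`, the violation score is 11 and two weeks count as failures: the second week misses the 1,100-liter benchmark, and the fourth week (for instance, after a deflagration) yields no output at all.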
If the failure characteristic is highly manifested, the performance of the chosen strategy is unsuccessful, irrespective of whether it is more violating or more compliant. On the other hand, if the failure value is low, the performance of the chosen strategy is successful in most cases.

A change in the chosen strategy between two successive startups is also given a numerical expression. For example, if in Week 13 a classic violation (coded 4) was conducted, and the following week an order reactance (coded 6) was identified, the behavior shift amounts to 2. All differences between the chosen strategies in successive weeks are added together and express the magnitude of the overall behavior shift. This characteristic describes whether the behavior shown is variable (high) or stable (low). Based on this definition, the identified clusters will first be described, then interpreted and named.

Manipulation Check and Person-Related Variables

To ensure the participants’ awareness and acceptance of the simulation as a workplace, we used the presence scale as a manipulation check. As part of the study, a set of person-related variables was also assessed. These measurements are used for a more detailed description and interpretation of the targeted rule-related behavior types. The deployed scales are validated against a rule violation questionnaire, which describes 10 different rule-related everyday-life dilemmas (e.g., “I’d rather risk being caught speeding than be late to an important meeting”) with a 4-point Likert scale from agree to disagree. For a more detailed description of these scales and their theoretical and model-based link to rule-related behavior, the investigation by von der Heyde, Miebach, and Kluge (2014) is recommended.

Presence describes the state in which acting within the simulated world is experienced as a feeling of “being” in the simulated world (Frank & Kluge, 2014).
It is measured by an 11-item scale that is rated on a 6-point Likert scale from 1 (totally disagree) to 6 (totally agree). The Cronbach’s α of the scale is .78.

Cautiousness describes a person’s tendency to avoid risky situations. It is a subconstruct of integrity (Marcus, 2006) within a measurement for practice-oriented assessment concerning counterproductive work behavior. The seven items (e.g., “I am reasonable rather than adventure seeking”) are rated on a 5-point Likert scale. The Cronbach’s α of the scale is .75.

Sensitivity toward injustice. This concept, operationalized by Schmitt et al. (2004), is divided into the victim’s perspective (being disadvantaged with respect to others), the observer’s perspective (not being involved but recognizing that someone is being treated unfairly), and the perpetrator’s perspective (perceiving that one is being unjustifiably advantaged). The nine items, rated on a 5-point Likert scale, show a Cronbach’s α of .86.

Self-interest can be described as an action that is “undertaken for the sole purpose of achieving a personal benefit or benefits,” such as tangible (e.g., money) or intangible (e.g., group status) benefits (Cropanzano, Goldman, & Folger, 2005, p. 285). This concept is measured with five items rated on a 6-point Likert scale. The Cronbach’s α is .83.

The GMA was measured using the Wonderlic Personnel Test (Wonderlic, 2002). This scale assesses verbal, numerical, and spatial aspects of intelligence. Participants had 12 min to process 50 items. All correct answers are summed to form a total score of 50 points maximum.

The Cluster Analysis

The three factors of safety-related behavior were tested for their dependence. A bivariate correlation analysis revealed a significant correlation between behavior shift and violation score (r = .44, p < .01) and between behavior shift and failure (r = .26, p < .01). The characteristics violation score and failure were not significantly correlated.
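For readers who wish to retrace this step, the behavior shift defined earlier and a bivariate (Pearson) correlation can be sketched as follows. The function names are hypothetical, the analysis reported here was not run with this code, and summing absolute differences between successive weeks is an assumption, since the worked example (a change from code 4 to code 6 giving a shift of 2) does not cover a change toward a lower code.

```python
from math import sqrt
from typing import Sequence


def behavior_shift(codes: Sequence[int]) -> int:
    """Sum of absolute strategy-code differences between successive weeks."""
    return sum(abs(later - earlier)
               for earlier, later in zip(codes, codes[1:]))


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation of two equally long samples."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)
```

Applied per participant, `behavior_shift` yields one value for each of the 152 operators; passing these values together with the corresponding violation scores to `pearson_r` gives a coefficient of the kind reported above.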
Due to the different scales used to assess the characteristics, a z transformation was conducted. To identify different types of rule-related behavior, a hierarchical cluster analysis using the complete linkage method was conducted with IBM SPSS 19. This method is associated with strict homogeneity within the clustered groups and tends to build up a series of small groups (Schendera, 2010). It was chosen because its properties correspond to the aim of this analysis.

Results

A first manipulation check was conducted to ensure the participants’ acceptance of the simulation and their role as control room operator. The participants’ presence is significantly higher than the scale’s mean (range = 1–6; M = 3.35), t(147) = 4.90, p < .001. An item-based analysis showed significantly higher means for statements such as “I felt that I was part of the simulation” (M = 3.46), “I imagined that I was working in a real environment” (M = 3.50), and “I was able to assume the role of an operator” (M = 3.71).

To answer the first research question regarding the quantity of possible strategies (quality of violation), the choice rate for each strategy was counted and is represented by the left bar of each strategy in Figure 3. In terms of the conducted procedure (high-risk procedure or lower-risk procedure), 86.90% of all decisions were made in favor of the mandatory lower-risk procedure when summing up the applied strategies based on the lower-risk procedure (compliance, soft violation, defiant compliance, and scrape violation). Decisions in favor of the forbidden high-risk procedure, on which the classic violation, optimizing violation, order reactance, and straight contravention are based, were made in only the remaining 13.10% of cases, or 717 times. Comparing these data with the distribution of critical system states that emerged, we find that within all 5,472 startups of the plant, a total of 2,116 critical system states (38.67%) were detected by the system.
It should be recalled here that a critical system state is originally directly associated with conducting the forbidden high-risk procedure. Following the system approach to violations, which describes and relates to only the outcome of an action (or strategy), we find that a critical system state was detected in 38.67% of all cases, which leads to the deduction that the rule must have been violated and the forbidden high-risk procedure conducted in these cases. The perceived amount of compliance therefore decreases from 86.90% to 61.33%. It was further analyzed how often a particular type of operating procedure actually led to a critical system state. To highlight the critical states that were evoked particularly frequently, the percentages of critical states caused by conducting a respective strategy are depicted by the right bars in Figure 3. As an example, the optimizing violation was conducted in 0.3% of all decisions (21 times). This strategy led to a critical system state in 85.7% of all cases (18 times). The cause for the critical system states brought about by conducting one of the strategies within the range of the “safe”-proclaimed lower-risk procedure is reflected in the number of defiant compliance and scrape violations. Both of these violation types caused a critical system state in between 60% and 70% of cases. When considering the frequency of application, we find that 68.90% of the total number of critical system states were caused by conducting the lower-risk procedure (in whichever variation). On the other hand, 31.10% of all critical system states were caused by conducting the forbidden high-risk procedure.

These data answered the first question regarding the quantity of possible strategies. The conceptually derived strategies were found, and therefore the assumption of an existing spectrum of strategies between violation and compliance is confirmed.

Figure 3.
Rounded percentages of chosen strategy, depicted by the left bars, and the respective amount of critical system states evoked by the respective strategy, depicted by the right bars.

The Behavior Typology

To answer the subsequent research question of whether a behavior typology exists, a cluster analysis was conducted including the dependent variables violation score, failure, and behavior shift. A formal look at the data searches for a noticeable change in the cluster coefficient to decide the range in which cluster solutions (number of clustered groups) should be considered. A prominent increase in the cluster coefficient is found from the nine-cluster solution to the eight-cluster solution. Therefore, all solutions from one (nonclustered) to eight clusters are considered for further analysis. To identify appropriate solutions, three parameters are calculated for every cluster solution: explained variance compared with the one-cluster solution (ETA), proportional reduction of error compared with the prior cluster solution (PRE), and the corrected explained variance (Fmax), where the correction is needed because the uncorrected explained variance automatically increases with a rising number of clusters. A formally proper cluster solution should ideally show high values on each of the three parameters. Based on these formal parameters, three solutions with adequate properties (listed and highlighted in Table 2) are chosen for a content-based analysis.

Table 2: List of Parameters With Formally Appropriate Cluster Solutions in Bold

| Cluster Solution | ETA (η²) | PRE | Fmax |
|---|---|---|---|
| 1 | .00 | .00 | 0.00 |
| 2 | .01 | .01 | 1.44 |
| **3** | **.43** | **.43** | **56.34** |
| 4 | .46 | .05 | 41.92 |
| 5 | .47 | .03 | 33.12 |
| **6** | **.63** | **.30** | **50.21** |
| **7** | **.73** | **.27** | **66.01** |
| 8 | .75 | .07 | 62.34 |

Note. ETA = explained variance compared with the one-cluster solution; PRE = proportional reduction of error compared with the prior cluster solution; Fmax = corrected explained variance.
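The relationship between the three parameters in Table 2 can be sketched in Python. The source does not give formulas, so the definitions below are assumptions taken from common cluster analysis practice: PRE as the relative reduction of unexplained variance against the previous solution, and Fmax as an F-type ratio of explained to unexplained variance, each divided by its degrees of freedom. The sample size of 152 is taken from the abstract. With the rounded ETA values from Table 2, these assumed formulas approximately, but not exactly, reproduce the tabulated PRE and Fmax values.

```python
# Explained variance (ETA) per cluster solution, copied from Table 2.
ETA = {1: 0.00, 2: 0.01, 3: 0.43, 4: 0.46, 5: 0.47, 6: 0.63, 7: 0.73, 8: 0.75}
N_PARTICIPANTS = 152  # sample size reported in the abstract


def pre(eta_k, eta_prev):
    """Assumed definition: proportional reduction of unexplained variance
    relative to the previous (k - 1)-cluster solution."""
    return 1.0 - (1.0 - eta_k) / (1.0 - eta_prev)


def f_value(eta_k, k, n):
    """Assumed definition: explained variance per between-cluster degree of
    freedom divided by unexplained variance per within-cluster degree of freedom."""
    if k == 1:
        return 0.0  # a single cluster explains nothing
    return (eta_k / (k - 1)) / ((1.0 - eta_k) / (n - k))


for k in sorted(ETA):
    pre_k = 0.0 if k == 1 else pre(ETA[k], ETA[k - 1])
    f_k = f_value(ETA[k], k, N_PARTICIPANTS)
    print(f"{k} clusters: ETA={ETA[k]:.2f}  PRE={pre_k:.2f}  F={f_k:.2f}")
```

Small deviations from Table 2 are expected because the ETA inputs are rounded to two decimals, and the paper may have used a differently corrected statistic.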
A content-based view on the identified clusters within each cluster solution indicated that the three-cluster solution is not sufficiently nuanced to describe different types of rule-related behavior. Another possible solution suggested by the cluster analysis, the seven-cluster solution, contains too many cluster characteristics that resemble each other. Therefore, it is assumed that the six-cluster solution is the formally appropriate choice. A content-based look at the manifestations of the three factors within the six cluster groups shows that two of the cluster groups are characterized by the same manifestations; hence, the participants in these two clusters were assigned to a joined cluster group. This inductive/deductive interplay considering the strengths and weaknesses of both purely calculated and purely interpreted solutions reveals five clusters with different manifestations of variables that are related to rule-related behavior. These are presented and discussed next.

Figure 4. Manifestation of cluster characteristics. *p < .05. **p < .01.

To interpret the identified manifestations within each cluster, it is necessary to determine how a manifested characteristic is to be read. For this purpose, it is stipulated that there is only a low/high reading of the data. It will not be considered how high, or how low, a manifestation turns out to be. In other words, it counts only whether the variable is higher (or lower) than the mean within the nonclustered sample. All of the five identified cluster groups exhibit a unique constellation of manifested factors (Figure 4). The only manifested factor that does not differ significantly from the nonclustered mean is the behavior shift of Cluster 1, with an almost significant value of p = .056. Any other characteristic is significant, as marked in Figure 4.
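The low/high reading described above can be expressed as a short Python sketch. It assumes that the cluster means are z standardized, so that the mean of the nonclustered sample is 0; the function names and the numeric values are hypothetical and serve only as illustration.

```python
def read_manifestation(cluster_mean, sample_mean=0.0):
    """Dichotomous reading: only the direction relative to the
    nonclustered sample mean counts, not the size of the deviation."""
    return "high" if cluster_mean > sample_mean else "low"


def cluster_profile(cluster_means):
    """Map each characteristic of one cluster to its low/high reading."""
    return {name: read_manifestation(value) for name, value in cluster_means.items()}


# Hypothetical z-standardized means of one cluster (not study data).
hypothetical_cluster = {
    "violation_score": 0.8,
    "failure": -0.5,
    "behavior_shift": 0.9,
}

print(cluster_profile(hypothetical_cluster))
```

Under this reading, two clusters with the same pattern of "high" and "low" labels count as identical, which is the reasoning behind merging two of the six cluster groups.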
Further analysis of the person-related variables assessed is taken into account to identify further characteristics of the types of rule-related behavior (Figure 5). To make their manifestations within each cluster comparable within a single figure, the values are z standardized due to their different ranges. A one-way ANOVA showed no significant differences between the clustered groups for presence, F(4, 148) = 0.49, p = .74; cautiousness, F(4, 143) = 0.49, p = .74; sensitivity toward injustice, F(4, 143) = 1.68, p = .16; and age, F(4, 151) = 0.82, p = .51. For two of the person-related variables, a significant difference between the clusters can be seen. The first is self-interest, F(4, 143) = 2.96, p = .02, and the other is GMA, F(4, 151) = 3.72, p < .01. To gain a deeper insight into the different characteristics between the current clusters, a post hoc analysis (LSD) was also conducted. In Table 3, the mean differences are listed for self-interest in the upper-right part and for GMA in the lower-left part. For the mean differences to be read properly, the left column of Table 3 is to be compared with the heading row (e.g., the mean GMA of Cluster 5 is 7.05 points higher than that of Cluster 1, a significant difference). If read in the other direction, the algebraic sign changes from plus to minus and vice versa.

Figure 5. Standardized values for general mental ability and self-interest within each cluster.

Table 3: Mean Differences Between Clusters Within Self-Interest (Upper Right) and General Mental Ability (Lower Left)

| | Cluster 1 | Cluster 2 | Cluster 3 | Cluster 4 | Cluster 5 |
|---|---|---|---|---|---|
| Cluster 1 | | –0.59* | 0.04 | –0.31 | –0.07 |
| Cluster 2 | 3.26* | | 0.64** | 0.28 | 0.52* |
| Cluster 3 | 3.95* | 0.69 | | –0.36 | –0.12 |
| Cluster 4 | 1.10 | –2.19 | –2.88 | | 0.24 |
| Cluster 5 | 7.05** | 3.80* | 3.10 | 5.98* | |

*p < .05. **p < .01.

Cluster 1 (15%). Within this cluster, there is a low tendency to violate rules, and there is little change within this behavior. At the same time, however, participants in this cluster are very ineffective and fail to reach the demanded targets. Following a given rule is a good thing, but participants were not able to fulfill the production goals, and there was no effort to adjust their behavior despite the obvious failure. Therefore, the name the executor is chosen. These individuals merely do as they are told and continue to do so even if they are ineffective. It is debatable whether the cause of this behavior can be found in the comparatively low GMA. Although this low score would explain the high failure values, the similarly low values for self-interest suggest a low need for change, because the negative effects of insufficient productivity are not personally relevant.

Cluster 2 (29%). In this group, there is a very high and stable tendency to violate rules. This behavior is quite reasonable, because these people are successful when breaking the rules. Therefore, they are called the inconvincible, due to the mantra “Never change a running system.” A further indicator for this interpretation is the very high value for self-interest.

Cluster 3 (36%). At first glance, the people in this cluster might be seen as the “best behaved.” These individuals follow and never deviate from orders and are good at doing so. For this reason, they will be described as the well behaved. Even if the given rule is not the best one possible, they will not stray from these boundaries and will remain within the limitations of the rules and handle the situation in the best way possible. They constitute the largest group. Their GMA is a little higher than the average, but more interesting is that their value for self-interest is the lowest of all of the groups. They intentionally support the rule-following behavior. Obviously, there is no need to optimize the outcome to their own advantage.

Cluster 4 (7%).
In this type of rule-related behavior, a high tendency for violation is paired with a high rate of alteration within the behavior. Furthermore, the behavior shown is characterized by failure. There are conflicting possible interpretations for this behavior. First, consequences might be simply ignored, and individuals within this cluster might merely be searching for the best possible way to gain as much personal profit as possible (promised by violating the rule). Second, the highly variable behavior might be an indication of the attempt to compensate for the failure and the associated financial loss. The key to interpreting this behavior is the violation score. If they had tried to find a way to compensate for the failure, they would not continuously violate the rule but would also try the mandatory and safe rule. Hence, it can be determined that the first interpretation is the most accurate for this cluster, and it is titled the experimenter, who takes a high risk. There are only two clusters with an above-average manifestation of the self-interest variable, and this one is the second. This finding underlines the preferred interpretation.

Cluster 5 (13%). The final cluster is characterized by an above-average tendency to violate the rule, with a high behavior shift. This behavior is accompanied by a successful performance of the chosen strategy. This group of individuals is called the optimizer. These people are not afraid to bend or break the rule in their search for the best way to execute their task within the boundaries of success. This characterization is supported by incorporating the person-related variables. The optimizing strategy appears to require a high GMA to achieve success. This cluster shows the highest value in GMA. The performance of these persons can be seen as quite separate from any deliberations concerning rules. The tendency to violate due to self-interest is not highly pronounced.
Only the outcome seems to be crucial for any behavioral decision.

Discussion

The purpose of the study was first to identify different strategies of rule-related behavior and subsequently to derive a typology of rule-related behavior. The findings show positive results in both exploratory attempts. The approach of differentiating the understanding of compliance and violation revealed a spectrum of strategies that differ in their extent of violation of safety rules and procedures. Based on the discussion regarding whether a system or person approach to rule-related behavior should be followed, the identified typology shed light on the potential of an approach that combines both perspectives. The following paragraphs will discuss the study’s methodological issues and internal and external validity, necessary for interpreting the multifaceted results, and will conclude with implications for practitioners and perspectives for future research.

Methodological Issues

The fact that the data originate from a laboratory simulation study rather than field observations is due to the investigated topic of safety-related rules. Altering conditions or manipulating objects of interest would be neither legally nor ethically feasible. Indeed, such a manipulation would not be acceptable because, as outlined earlier, the consequences of rule violation can include severe accidents, involving fatalities and momentous environmental damage. The addressed industrial accidents are mainly caused by circumstances ascribed to goal conflicts. To induce this conflict in an experimental setting, we used the participants’ payment. Getting the job done and being paid for production outcome is, at some level, a monetary matter. Although real-world settings are more complex, the operationalization of goal conflict was deployed in the best way possible. A great deal of effort was undertaken to design an experimental setting with the highest possible external and internal validity.
WaTrSim was developed by experts in automation engineering at the Technical University of Dresden, Germany, and serves as a realistic model of an actual real-world plant simulating states, dynamics, and interdependences of plant sections and working substances. In terms of Gray’s (2002) description of simulated task environments, WaTrSim can be labeled as a high-fidelity simulation. Gray argues that fidelity is relative to the research question being asked. In contrast, the software does not show characteristics that are perceived and therefore treated as a video game. Assessing WaTrSim in terms of playfulness, we find it does not meet the necessary motives defined by Unger, Goossens, and Becker (2015), who catalog motives for exchange, leadership, handling, competition, acquisition, and experience. Only the motive for problem solving can be assigned to the simulation. Furthermore, researchers investigating the ability of simulation studies to derive reliable results (Alison et al., 2013; Stone-Romero, 2011; Thibaut, Friedland, & Walker, 1974; Weick, 1965) have argued that results from simulations with high internal validity demonstrate their applicability in the practical field. More specifically, Kessler and Vesterlund (2014) argue that the focus should be on qualitative experimental validity rather than on quantitative validity. In other words, “the emphasis in laboratory studies is to identify the direction rather than the precise magnitude of an effect” (Kessler & Vesterlund, 2014, p. 3). Our assumption of labeling every rule deviance as a violation will be discussed according to this aspect. To prevent errors, participants’ procedural and declarative knowledge was ensured. They were trained twice and handled the plant 22 times before the relevant part of the study occurred.
The subsequent 36 performance occasions may also contain some errors, but owing to the preparations we made, we assume that they do not distort the results. Although the specific magnitude might be affected, neither the structure nor the direction should be influenced. Considering the assessed knowledge and attained outcome, it can be assumed that the participants were very well adapted. A satisfactory degree of internal validity can be assumed, partially due to the methodological procedure of identifying clusters. The differences within the dependent variables are the only indicator for building up the cluster. Conversely, the independent variables (cluster affiliation) can be stated as the only known origin of differences in the dependent variables. Moreover, the content-based analysis of the clusters’ characteristics, which are the manifestations of the dependent variables, with regard to the person-related variables self-interest and GMA, indicates that internal validity is given. Common threats, such as history, maturation, or changes in the measurement tool, cannot be assumed due to the short period of observation. The aspect of reactivity, caused particularly by the induced goal conflict, is detected and controlled for by the dependent variables. As participants’ reactions to the exploratory setting are desired, the extent of reactivity is at least partially depicted in the assignment to the respective cluster groups. Indications of satisfactory external validity can be seen in the participants’ presence regarding their perception and handling of the simulation situation. It can be sufficiently assumed that participants were working as they would in a real environment and were acting like actual control room operators; thus, the following deductions are permissible. 
Key Findings

At first glance, the vast number of safety-critical operations made by the participants might be unexpected and may call into question the realistic image of behavior provided in the study. However, based on investigation reports dealing with accidents on production plants, it sadly has to be concluded that this study does properly depict the circumstances in many work environments. A state of “normalization of deviance” and decreasing safety culture give workers the impression of acting appropriately, although any objective observation would note the opposite (CSB, 2015).

One very important lesson that can be gleaned from the present study is that it is necessary to differentiate between rule violations and procedure violations. In many industrial work settings, there are not just rules to follow but also procedures required by the rules. This situation makes it possible to violate the procedure to a certain extent, corresponding to the extent to which the procedure is neglected. If some safety rules are added, the range of the quality of violations is widened, because the procedure now contains safety and non-safety-related substeps. Drawing from these conclusions, we contend that the investigation of violations should incorporate to what part of a rule or procedure the behavior is related.

The most interesting strategies of the spectrum of rule-related behavior are defiant compliance and scrape violation. In describing the conceptually possible strategies, these two are characterized as an attempt to compensate for the disadvantages of the extended procedure as well as to optimize the outcome. This finding led to the assumption that most people try to comply with the rule on the one hand but attempt to avoid the personal disadvantages by optimizing the procedure within the boundaries of the given rules on the other hand.
Referring to Reason’s (2008) Swiss cheese model, the goal conflict, created by the management, can be seen as a latent factor that weakens the defense layers on the organizational level. The operator’s tendency to optimize the procedure weakens the defense layer at the sharp end, bringing the system into a critical state and making accidents more likely. This assumption can be confirmed by our results. This so-called fine-tuning of the procedures was found in 64.2% of all conducted startups and led to a critical system state in 60% to 70% of cases. Similar to these results, for Russian nuclear power plants, the percentage of violations as cause for operating errors is estimated at 68.8% for 2009, followed by mistakes (20.8%) and slips and lapses (10.4%; Smetnik, 2015). These huge figures demonstrate the risk inherent in this endeavor by the operators to avoid disadvantages and point out the importance of the person approach. Only by considering the actual actions of operators can the factual mechanisms leading to the corresponding consequences be discovered, analyzed, and dealt with.

Although the rule was violated in terms of conducting the high-risk procedure in only about 13% of all cases, the hazard of this violation should not be underrated. On the contrary, analysis revealed that conducting this strategy led to a probability of between 80% and 99.6% of a critical system state occurring. Even if the frequency is far lower than the frequency of rule compliance, the inherent risk for the system and the workers is very high.

With respect to the resulting clusters, it can be noted that there is no highly manifested behavior shift value if the violation score is low. This finding leads to the general conclusion that if rules are followed (low violation score), there is no behavioral adaptation and no actual change in applied strategies, irrespective of the amount of success or failure.
Additionally, in both the clusters concerned (1 and 3), the value of self-interest is quite low compared with the values of the clusters with a high violation score. Therefore, it can be concluded that subjects with a low tendency to violate safety rules are simultaneously not very selfish. They will not adapt their behavior or strategies even for their own sake, to save themselves from failing to reach production targets. One important difference between the rule-compliant clusters must be taken into account: They score differently with respect to GMA. The clusters with high failure values score low on GMA, as does Cluster 4, with the high violation score. Accordingly, it can be stated that a successful execution of a complex task requires some cognitive ability. It is difficult to make further interpretations of the interplay between the person-related variables and the manifested values defining the clusters due to the questionable correlative relationship between failure and behavior shift. There is not necessarily a causal flow of failure leading to a change in behavior. A greater variety in behavior can also lead to reduced success, as an operator may be used to changing actions only within a small range and may therefore be inexperienced in applying certain strategies.

The most valuable result to be derived from the identified clusters is the unjust disparagement of the violator in general. The types of rule-related behavior that are found based on the common understanding of compliance and violation in the human error literature are the well behaved and the experimenter. However, there is also compliant behavior that is counterproductive and a violating cluster that potentially improves the process, namely, the optimizer. This result underlines Besnard and Greathead’s (2003) argument stressing the beneficial aspect of violating behavior.
The proactive behavior provided by the optimizer is crucial for optimizing industrial processes, also in the sense of safety enhancement (Li, Powell, & Horberry, 2012). Although supporting the organizational production goals is generally desirable, this motivation might lead to the side effect that the optimizer’s positive intent to improve procedure in the short term leads to habitual fine-tuning with safety risks for the organization in the long term. Beyond this finding, Besnard and Greathead’s (2003) approach to safe violations, which takes into account mental resources to gain a mental model, is reflected by the differences between the clusters referring to GMA. This finding emphasizes the necessity for a differentiated view on rule-related behavior that goes beyond the black-and-white compliance-versus-violation perspective. The typology-like characterization by Hudson et al. (2000) made a step in this direction, implementing not only the apparent event but also the attitude toward violating, in their two-dimensional description. They also took a further step by thinking in terms of natural-born violators. The results of our work suggest the need for a more differentiated view of traits and give an impression of an interplay between organizational causes (goal conflicts), situational factors (failure or success), and personal factors (mental resources and self-interest).

Implications for Further Research

In order to justify the applied variables (violation score, failure, and behavior shift), it was necessary to incorporate different approaches, particularly as there is no model to describe and predict behavior in a routine task.
To achieve this goal, it is required to formulate a theory that joins together different approaches, purposes, and perspectives or, as called for by Locke and Latham (2004), to “integrate extant theories by using existing meta-analysis to build a megatheory of work motivation.” (p. 388). The result should and would be a process model combining the findings, assumptions, and conclusions of decades of research on human action. The theories and models that were drawn on to identify rule-related behaviors within the present work are suitable to be merged in this sense. Further investigations need to clarify the correlative relationship between behavior shift and failure. Two ways of interaction can be assumed: Owing to high behavioral variability, the learning effect is quite low, so the failure rate rises. Alternatively, due to being unsuccessful, the participants tried to vary their strategies. There are also some differences in results if the system approach or the person approach is followed, as the descriptive results of committed violations demonstrate. The number of rule violations was overestimated by taking only the outcome of the operator’s action into account, which represents the system approach to evaluate the operator’s behavior. By taking into account the person approach, the hazardous but also potentially beneficial (process-optimizing) qualities of different strategies were revealed. It would be worthwhile to pursue and investigate this approach in order to extend our knowledge about roots and causes of behaviors and events. Suggestions for further research can also be found in a study referring to the counterproductive impact of audits on the tendency to violate rules (von der Heyde, Brandhorst, & Kluge, 2015). The study focused on the bomb crater effect, which describes a generally increased tendency to violate rules directly after an executed audit. 
Regarding the typology, it would be interesting to investigate how the different types act in the sense of the bomb crater effect. It would also be advisable for future studies to validate the clusters’ structure, both in experimental studies and in field observations.

Implications for Practice

One of the most important aspects of this work is the variety of strategies detected and the resulting types of rule-related behavior. The described types of violating behavior are particularly important in terms of deciding whether they should be prevented or promoted. The identified optimizers can have a beneficial impact on an organization’s productivity and can be mistakenly excluded due to misinterpretations of violating behavior. Knowledge on how to identify, support, and integrate them properly would harbor the potential for commercial advantage. The present work provides first indications leading to a cautious recommendation to elaborate on two key aspects.

Workers’ results can be viewed from two perspectives, the system-oriented and the action-oriented perspective discussed earlier. Merging these can lead to the detection of hitherto unknown, but nevertheless relevant, nuances of work behavior. Designing a method that gathers the required information and data can foster the understanding and examination of applied strategies. Based on this method, information that supports human resource policy can be generated.

Besides staff assessment and derivation of task requirements, a redesign of audits, notably safety audits, is suggested. As outlined earlier, a system-oriented perspective can be fragmentary and in some cases even unjust. The aforementioned overestimated number of rule violations and associated fines (as an organizational reaction to rule violation) involves stress and dissatisfaction and may result in complaints or even staff turnover.
An alternative approach to safety audits would incorporate a sensible balance of system and person analyses, with the aims of (a) understanding local rationalities and developing guidelines and training for improved performance and (b) preventing negative effects of unjustified fines and imposed sanctions.

Acknowledgments

This work was supported by the German Research Foundation under Grant KL2207/2-1.

References

Alison, L., van den Heuvel, C., Waring, S., Power, N., Long, A., O’Hara, T., & Crego, J. (2013). Immersive simulated learning environments for researching critical incidents: A knowledge synthesis of the literature and experiences of studying high-risk strategic decision making. Journal of Cognitive Engineering and Decision Making, 7, 255–272.

Alper, S. J., & Karsh, B. T. (2009). A systematic review of safety violations in industry. Accident Analysis & Prevention, 41, 739–754.

Ajzen, I. (1991). The theory of planned behavior. Organizational Behavior and Human Decision Processes, 50, 179–211.

Besnard, D., & Greathead, D. (2003). A cognitive approach to safe violations. Cognition, Technology & Work, 5, 272–282.

Cropanzano, R., Goldman, B., & Folger, R. (2005). Self-interest: Defining and understanding a human motive. Journal of Organizational Behavior, 26, 985–991.

Chemical Safety Board. (2011). Experimenting with danger. Retrieved from http://www.csb.gov/csb-releases-new-videoon-laboratory-safety-at-academic-institutions/

Chemical Safety Board. (2015). Final investigation report: Chevron Richmond refinery. Pipe rupture and fire (Report No. 2012-03-i-ca). Retrieved from www.csb.gov/assets/1/19/Chevron_Final_Investigation_Report_2015-01-28.pdf

Desai, V. M. (2010). Rule violations and organizational search: A review and extension. International Journal of Management Reviews, 12, 184–200.

Frank, B., & Kluge, A. (2014). Development and first validation of the PLBMR for lab-based microworld research. In A. Felnhofer & O. D.
Kothgassner (Eds.), Proceedings of the International Society for Presence Research (pp. 31–42). Vienna, Austria: Facultas. Frese, M., & Zapf, D. (1994). Action as the core of work psychology: A German approach. In H. C. Triandis, M. D. Dunette, & L. M. Hough (Eds.), Handbook of industrial and organizational psychology (Vol. 4, pp. 271–340), Palo Alto, CA: Consulting Psychologist Press. Gray, W. D. (2002). Simulated task environments: The role of highfidelity simulations, scaled worlds, synthetic environments, and laboratory tasks in basic and applied cognitive research. Cognitive Science Quarterly, 2, 205–227. Heckhausen, H., & Leppmann, P. K. (1991). Motivation and action. Berlin, Germany: Springer. Hollnagel, E. (2009). The ETTO principle: Efficiency-thoroughness trade-off. Burlington, VT: Ashgate. Hudson, P. T., Verschuur, W. L. G., Parker, D., Lawton, R., & van der Graaf, G. (2000). Bending the rules: Managing violation in the workplace. Paper presented at the International Conference of the Society of Petroleum Engineers. Retrieved from www .energyinst.org.uk/heartsandminds/docs/bending.pdf 195 Kessler, J., & Vesterlund, L. (2014). The external validity of laboratory experiments: The misleading emphasis on quantitative effects. Retrieved from www.pitt.edu/~vester/External_Validity .pdf Kluge, A., Badura, B., & Rietz, C. (2013). Framing effects of production outcomes, the risk of an accident, control beliefs and their effects on safety-related violations in a production context. Journal of Risk Research, 16, 1241–1258. Kluge, A., Badura, B., Urbas, L., & Burkolter, D. (2010). Violations-inducing framing effects of production goals: Conditions under which goal setting leads to neglecting safety-relevant rules. In Proceedings of the Human Factors and Ergonomics Society 54th Annual Meeting (pp. 1895–1899). Santa Monica, CA: Human Factors and Ergonomics Society. Li, X., Powell, M. S., & Horberry, T. (2012). 
Human factors in control room operations in mineral processing: Elevating control from reactive to proactive. Journal of Cognitive Engineering and Decision Making, 6, 88–111. doi:1555343411432340 Locke, E. A., & Latham, G. P. (2004). What should we do about motivation theory? Six recommendations for the twenty-first century. Academy of Management Review, 29, 388–403. Marcus, B. (2006). Inventar berufsbezogener Einstellungen und Selbsteinschätzungen (IBES) [Inventory of vocational attitudes and self-assessment]. Göttingen, Germany: Hogrefe. Mason, S. (1997). Procedural violations: Causes, costs and cures. In F. Redmill & J. Rajan (Eds.), Human factors in safety-­ critical systems (pp. 287–318). London, UK: Butterworth Heinemann. Mohiyeddini, C., & Montada, L. (2004). “Eigeninteresse” und “Zentralität des Wertes Gerechtigkeit für eigenes Handeln”: Neue Skalen zur Psychologie der Gerechtigkeit [“Self-interest” and “value-centrality of justice for one’s own behavior”: New scales for justice psychology]. Retrieved from http://www .gerechtigkeitsforschung.de/berichte/ Morris, J. (2012, July 26). UCLA researcher’s death draws scrutiny to lab safety. California Watch. Retrieved from http:// californiawatch.org/higher-ed/ucla-researchers-death-drawsscrutiny-lab-safety-17280 Reason, J. T. (1990). Human error. Cambridge, UK: Cambridge University Press. Reason, J. T. (1997). Managing the risks of organizational accidents. Aldershot, UK: Ashgate. Reason, J. T. (2000). Human error: models and management. British Medical Journal, 320, 768–770. Reason, J. T. (2008). The human contribution. Chichester, UK: Ashgate. Schendera, C. (2010). Clusteranalyse mit SPSS: Mit Faktorenanalyse [Cluster analysis with SPSS: With factor analysis]. Munich, Germany: Oldenbourg Wissenschaftsverlag. Schmitt, M., Maes, J., & Schmal, A. (2004). 
Gerechtigkeit als innerdeutsches Problem: Analyse der Meßeigenschaften von Meßinstrumenten für Einstellungen zu Verteilungsprinzipien, Ungerechtigkeitssensibilität und Glaube an eine gerechte Welt [Justice as an inner-German problem: Analysis of measurement characteristics for attitudes toward distributive principles, sensitivity towards injustice and belief in a just world]. Saarbrücken, Germany: Universitäts- und Landesbibliothek. Smetnik, A. (2015, December). Safety culture associated personnel errors during decommissioning of nuclear facilities. Presentation at the Technical Meeting on Shared Experiences and Lessons Learned From the Application of Different Management System Standards in the Nuclear Industry of IAEA, Vienna, Austria. 196 June 2016 - Journal of Cognitive Engineering and Decision Making Starbuck, W. H., & Milliken, F. J. (1988). Challenger: Fine-tuning the odds until something breaks. Journal of Management Studies, 25, 319–340. Stone-Romero, E. F. (2011). Research strategies in industrial and organizational psychology: Nonexperimental, quasi-experimental, and randomized experimental research in special purpose and nonspecial purpose settings. In S. Zedeck (Ed.), APA handbook of industrial and organizational psychology (pp. 37– 72). Washington, DC: American Psychological Association. Taimin, J. (2011). The Piper Alpha disaster. Retrieved from http:// de.slideshare.net/joeh2012/assignment-piper-alpha Thibaut, J., Friedland, N., & Walker, L. (1974). Compliance with rules: Some social determinants. Journal of Personality and Social Psychology, 30, 792–801. Unger, T., Goossens, J., & Becker, L. (2015). Digitale Serious Games [Digital Serious Games]. In U. Blötz (Ed.), Planspiele und Serious Games in der beruflichen Bildung (pp. 157–186). Bielefeld, Germany: Bertelsmann. von der Heyde, A., Brandhorst, S., & Kluge, A. (2013). 
Safety related rule violations investigated experimentally: One can only comply with rules one remembers and the higher the fine, the more likely the “soft violations.” In Proceedings of the Human Factors and Ergonomics Society 57th Annual Meeting (pp. 225–229). Santa Monica, CA: Human Factors and Ergonomics Society. von der Heyde, A., Brandhorst, S., & Kluge, A. (2015). The impact of the accuracy of information about audit probabilities on safety-related rule violations and the bomb crater effect. Safety Science, 74, 160–171. von der Heyde, A., Miebach, J., & Kluge, A. (2014). Counterproductive work behaviour in a simulated production context: An exploratory study with personality traits as predictors of safety-related rule violations. Journal of Ergonomics, 4(130). doi:10.4172/2165-7556.1000130 Weick, K. E. (1965). Laboratory experimentation with organizations. In J. G. March (Ed.), Handbook of organizations (pp. 194–260). Chicago, IL: Rand McNally. Whittingham, R. B. (2004). The blame machine: Why human error causes accidents. Oxford, UK: Elsevier. Wonderlic. (2002). Wonderlic Personnel Test. Libertyville, CA: Author. Sebastian Brandhorst is a PhD student at the department of Work, Organizational, and Business Psychology at Ruhr University Bochum. He holds a master of science in applied cognitive and media science. His research focuses on the impact of organizational and individual factors on safety-related rule violations in high-reliability organizations. Annette Kluge is a full professor for Work, Organizational, and Business Psychology at Ruhr University Bochum, Germany. She obtained her diploma in work and organizational psychology at the Technical University Aachen and her doctorate in ergonomics and vocational training at the University of Kassel, Germany, in 1994. Her expertise is in human factors and ergonomics, training science, skill acquisition and retention, safety management, and organizational learning from errors.